Train LLMs locally with zero setup

PLUS: Open Source Meta-Agent Framework

In today’s newsletter:

Unsloth Docker Image: Train LLMs Locally with Zero Setup

Mem0: Build AI Agents with scalable long-term memory

ROMA: Open Source Meta-Agent Framework

Reading time: 3 minutes.

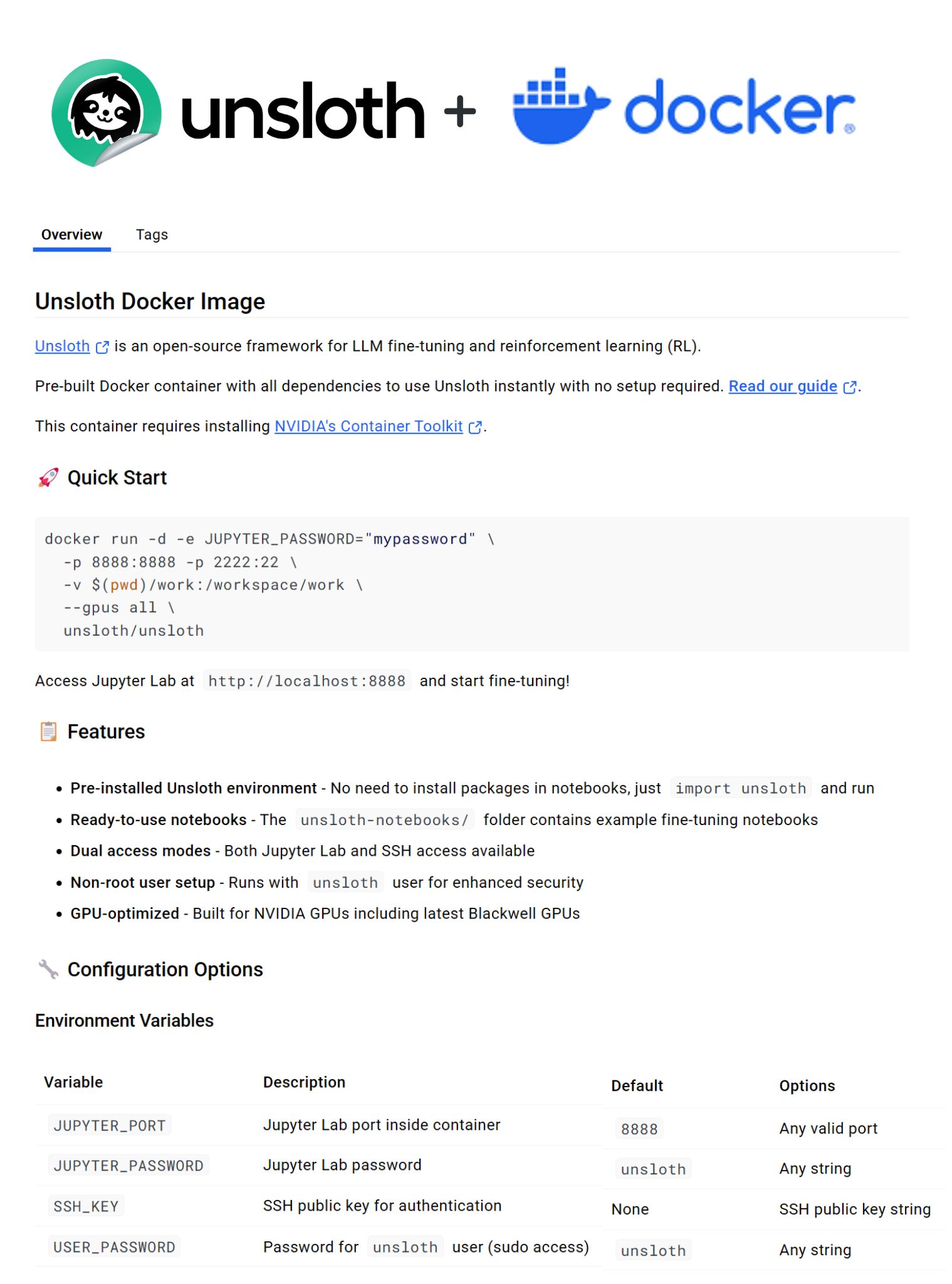

Unsloth AI has released a Docker image that lets you train LLMs locally with zero setup.

Local training is often complex due to dependency issues or broken environments.

With this Docker image, you can bypass those problems entirely. Just pull, run, and start training.

Key Features:

Pre-installed Unsloth environment - No need to install packages in notebooks, just `import unsloth` and run

Ready-to-use notebooks - The `unsloth-notebooks/` folder contains example fine-tuning notebooks

Dual access modes - Both Jupyter Lab and SSH access available

Non-root user setup - Runs with the `unsloth` user for enhanced security

GPU-optimized - Built for NVIDIA GPUs, including the latest Blackwell GPUs
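Once the container is running, a quick sanity check in a notebook cell can confirm that the pre-installed packages and the GPU are visible. The snippet below is a minimal sketch, not part of the Unsloth image: the helper names `check_environment` and `gpu_available` are made up for illustration, and it assumes the image ships PyTorch alongside Unsloth.

```python
import importlib.util


def check_environment(required=("unsloth", "torch")):
    """Map each package name to True if Python can locate it (without importing it)."""
    return {name: importlib.util.find_spec(name) is not None for name in required}


def gpu_available():
    """Return True only if PyTorch imports cleanly and reports a CUDA device."""
    try:
        import torch
    except Exception:
        # PyTorch is missing or failed to load, so no GPU can be used from Python.
        return False
    return bool(torch.cuda.is_available())


if __name__ == "__main__":
    for name, found in check_environment().items():
        print(f"{name}: {'found' if found else 'missing'}")
    print(f"CUDA GPU visible: {gpu_available()}")
```

If either package shows up as missing, or no GPU is visible, the likely culprit is how the container was started (for example, GPU access not passed through) rather than the notebooks themselves.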

It’s 100% Open Source.

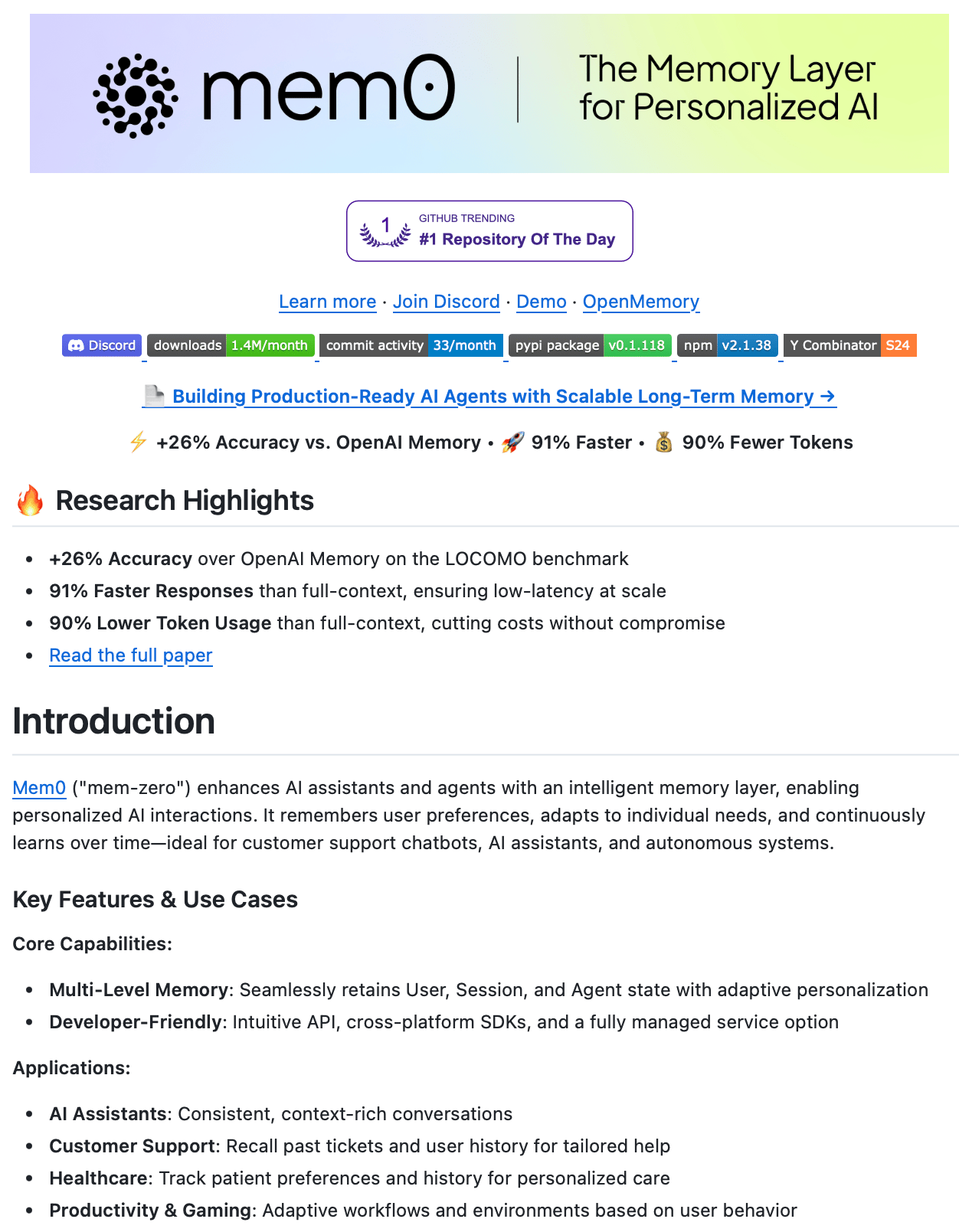

Mem0 is a fully open-source memory layer that gives AI agents persistent, contextual memory across sessions.

Without memory, agents reset after every interaction, losing context and continuity, which limits their usefulness in real-world applications.

Mem0 solves this by storing and managing long-term context efficiently, ensuring agents can learn and evolve with every conversation.

Here’s how it works:

Memory extraction – Uses LLMs to identify and store key facts from conversations while preserving full context

Memory filtering & decay – Prevents memory bloat by removing or aging irrelevant data over time

Hybrid storage – Combines vector search for semantic lookup with graph-based relationships for structured context

Smart retrieval – Prioritizes memories based on relevance, recency, and importance

Efficient context handling – Reduces token usage by sending only the most relevant memory snippets back to the model
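To make the retrieval and decay ideas above concrete, here is a toy sketch in plain Python. It is not Mem0's implementation or API: the `MemoryItem` class, the scoring weights, the one-day half-life, and the word-overlap relevance (a stand-in for real vector search) are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class MemoryItem:
    text: str
    importance: float   # 0..1, assumed to be assigned when the fact is extracted
    created_at: float   # timestamp in seconds


def relevance(query, text):
    """Jaccard word overlap, a crude stand-in for semantic vector similarity."""
    q, t = set(query.lower().split()), set(text.lower().split())
    union = q | t
    return len(q & t) / len(union) if union else 0.0


def recency(item, now, half_life=86400.0):
    """Exponential decay: the score halves every `half_life` seconds."""
    age = max(0.0, now - item.created_at)
    return 0.5 ** (age / half_life)


def score(item, query, now, weights=(0.6, 0.2, 0.2)):
    """Blend relevance, recency, and importance into one ranking score."""
    w_rel, w_rec, w_imp = weights
    return (w_rel * relevance(query, item.text)
            + w_rec * recency(item, now)
            + w_imp * item.importance)


def retrieve(memories, query, now, k=2):
    """Return only the top-k memories, keeping the prompt small."""
    ranked = sorted(memories, key=lambda m: score(m, query, now), reverse=True)
    return ranked[:k]


def prune(memories, now, min_keep=0.05):
    """Decay step: drop memories that are both old and unimportant."""
    return [m for m in memories if recency(m, now) * m.importance >= min_keep]


if __name__ == "__main__":
    day = 86400.0
    now = 10 * day
    store = [
        MemoryItem("user prefers vegetarian food", 0.9, now - 1 * day),
        MemoryItem("user said hello", 0.1, now - 9 * day),
        MemoryItem("user lives in Berlin", 0.8, now - 2 * day),
    ]
    for m in retrieve(store, "what food does the user prefer", now, k=1):
        print("retrieved:", m.text)
    print("kept after pruning:", [m.text for m in prune(store, now)])
```

Only the top-ranked snippets would be sent back to the model, which is where the token savings come from. A real system would swap the word-overlap function for embedding search and add the graph layer for relationships between facts.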

It’s 100% Open Source.

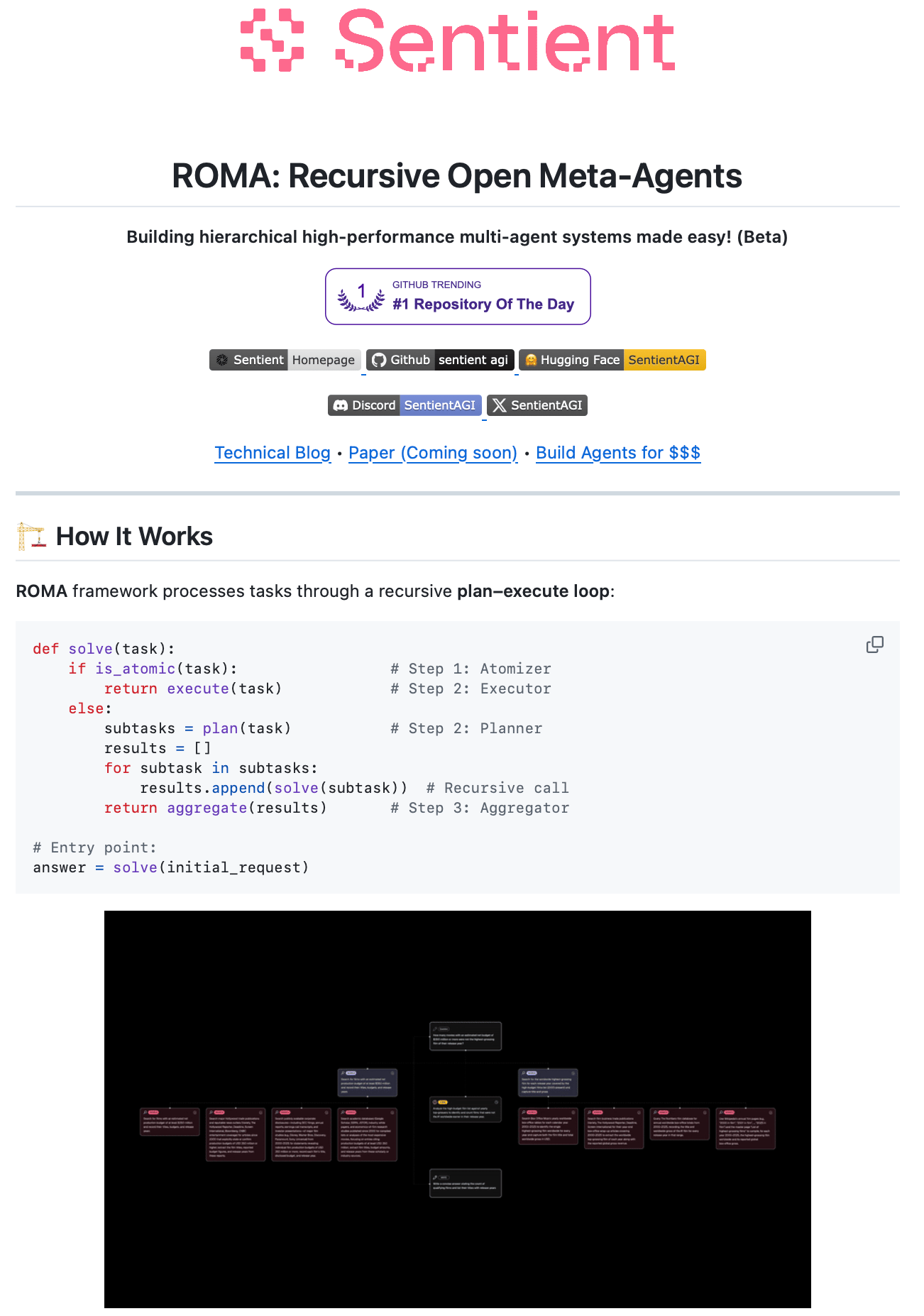

Meta-agent framework for building high-performance multi-agent systems!

ROMA is an open-source meta-agent framework for building agents with hierarchical task execution.

It adopts a recursive hierarchical architecture where tasks are decomposed into subtasks, agents handle the subtasks, and results are aggregated upward.

The goal is to simplify the development of complex agent workflows by making task decomposition, coordination, and tracing more manageable.

Key components:

Atomizer: Determines whether a task is “atomic” (directly executable) or requires planning.

Planner: Breaks down non-atomic tasks into subtasks.

Executor: Executes atomic tasks using LLMs, APIs, or even other agents.

Aggregator: Collects results from subtasks and merges them into a parent output.

The recursion loop follows: solve(task) → decompose → solve(subtasks) → aggregate results.
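The loop can be sketched in a few lines of Python. This is a toy illustration of the pattern, not ROMA's actual code or API: the function names, the string-splitting rules that stand in for the Atomizer and Planner, and the `max_depth` guard are all assumptions. In a real system each of these steps would typically call an LLM or a tool.

```python
from typing import List


def is_atomic(task: str) -> bool:
    """Atomizer (toy rule): a task is atomic if it has no ';' or ' and ' in it."""
    return ";" not in task and " and " not in task


def plan(task: str) -> List[str]:
    """Planner (toy rule): split on ';' first, otherwise on ' and '."""
    separator = ";" if ";" in task else " and "
    return [part.strip() for part in task.split(separator) if part.strip()]


def execute(task: str) -> str:
    """Executor: placeholder for an LLM call, API request, or another agent."""
    return f"done({task})"


def aggregate(results: List[str]) -> str:
    """Aggregator: merge subtask results into one parent output."""
    return "[" + " + ".join(results) + "]"


def solve(task: str, depth: int = 0, max_depth: int = 5) -> str:
    """solve(task) -> decompose -> solve(subtasks) -> aggregate results."""
    if is_atomic(task) or depth >= max_depth:
        return execute(task)
    subtasks = plan(task)
    results = [solve(sub, depth + 1, max_depth) for sub in subtasks]
    return aggregate(results)


if __name__ == "__main__":
    print(solve("research topic and draft outline; write post"))
```

The depth limit is there so a planner that keeps producing non-atomic subtasks cannot recurse forever. Because every node returns a result to its parent, the same call tree doubles as a trace of how the final answer was assembled.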

That’s a Wrap

That’s all for today. Thank you for reading, and see you in the next issue with more AI Engineering insights.

PS: We curate this AI Engineering content for free, and your support means everything. If you find value in what you read, consider sharing it with a friend or two.

Your feedback is valuable: If there’s a topic you’re stuck on or curious about, reply to this email. We’re building this for you, and your feedback helps shape what we send.
