
Hands-On LLM Course

PLUS: Open standard for sharing AI agents

In today’s newsletter:

Hands-on LLM course with roadmaps + Colab notebooks

Open standard for sharing AI agents (.af)

NVIDIA research on small language models for agentic AI

Reading time: 3 minutes.

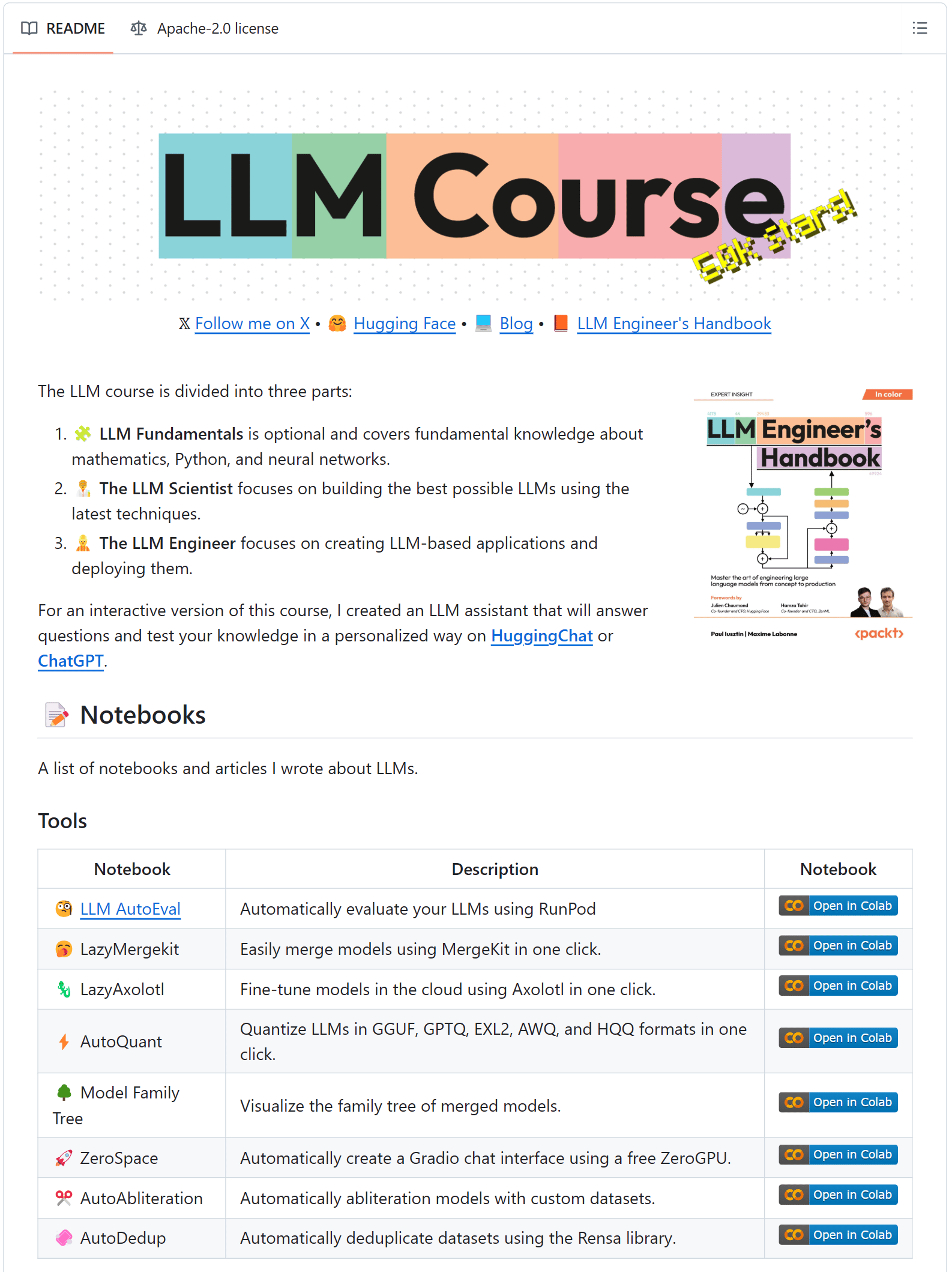

The best hands-on course to learn Large Language Models with roadmaps and Colab notebooks!

The LLM Course covers the end-to-end LLM application lifecycle, from design to deployment.

The course is divided into three parts:

LLM Fundamentals covers the essentials of mathematics, Python, and neural networks.

The LLM Scientist focuses on building the best possible LLMs using the latest techniques.

The LLM Engineer focuses on creating LLM-based applications and deploying them.

The entire course is open-source and available on GitHub.

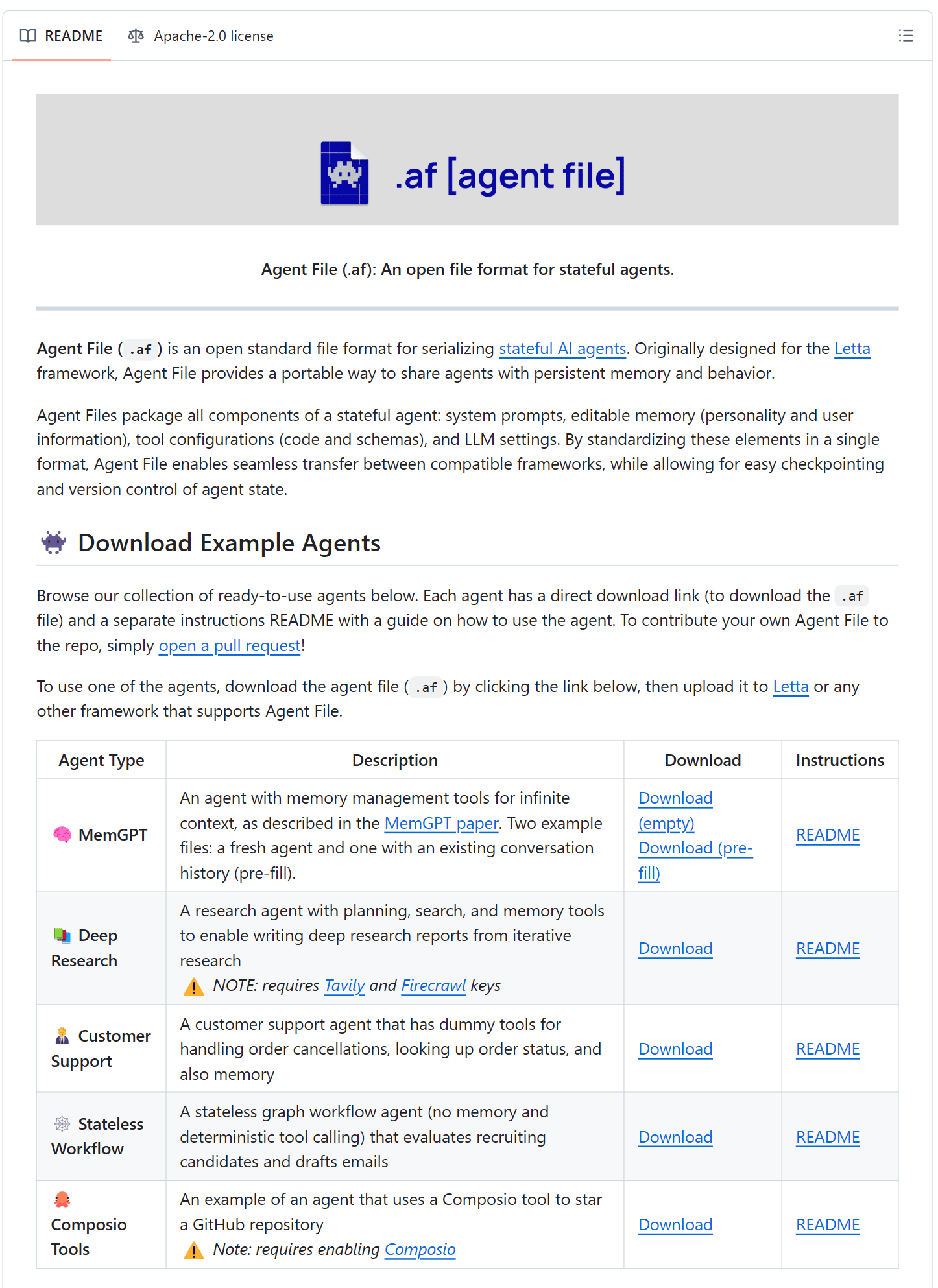

Agent File (.af) is an open standard for serializing the full state of an AI agent in a single, portable JSON file.

It packages everything an agent needs, including its system prompt, editable memory, tool configurations, LLM settings, and environment variables.

Why it matters:

Portability - move agents across frameworks or environments

Collaboration - share agents with teams or publish them

Preservation - save snapshots for reproducibility

Versioning - track changes as your agents evolve

What’s inside a .af file:

Model configuration - Model name, embedding model, context limits

System prompt - Defines core agent behavior

Message history - Full chat logs with annotations

Memory blocks - Personality and user data

Tool rules - Execution constraints and sequencing

Environment variables - Runtime configs for tools

Tools - Source code and schema definitions
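To make the idea of "the full agent state in one JSON file" concrete, here is a minimal Python sketch that bundles the components listed above into a single dictionary, saves it, and reloads it. The key names (`model_config`, `memory_blocks`, `tool_rules`, and so on), the model names, and the `search` tool are hypothetical placeholders chosen to mirror the list; they are not the official .af schema, so consult the Agent File spec before producing real files.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical agent snapshot. The key names below are illustrative placeholders
# that mirror the components listed above; they are NOT the official .af schema.
agent_state = {
    "model_config": {
        "model": "example-llm",                # hypothetical model name
        "embedding_model": "example-embedder",  # hypothetical embedding model
        "context_window": 8192,
    },
    "system_prompt": "You are a concise research assistant.",
    "messages": [
        {"role": "user", "content": "Find papers on small language models."},
        {"role": "assistant", "content": "Searching now."},
    ],
    "memory_blocks": [
        {"label": "persona", "value": "Concise and friendly."},
        {"label": "human", "value": "Prefers short summaries."},
    ],
    "tool_rules": [{"tool": "search", "rule": "must_run_first"}],
    "environment_variables": {"SEARCH_API_KEY": "<placeholder>"},
    "tools": [
        {
            "name": "search",
            "source_code": "def search(query: str) -> str:\n    return 'results for ' + query\n",
            "json_schema": {
                "name": "search",
                "parameters": {
                    "type": "object",
                    "properties": {"query": {"type": "string"}},
                    "required": ["query"],
                },
            },
        }
    ],
}


def save_agent(state: dict, path: Path) -> None:
    """Write the full agent state to a single, human-readable JSON file."""
    path.write_text(json.dumps(state, indent=2), encoding="utf-8")


def load_agent(path: Path) -> dict:
    """Read an agent snapshot back into a plain dictionary."""
    return json.loads(path.read_text(encoding="utf-8"))


# Round trip: save a snapshot, reload it, and check nothing was lost.
with tempfile.TemporaryDirectory() as tmp:
    snapshot_path = Path(tmp) / "research_assistant.af"
    save_agent(agent_state, snapshot_path)
    restored = load_agent(snapshot_path)

print(restored == agent_state)  # True
```

Because everything lives in plain JSON, a snapshot like this can be diffed in version control or handed to a teammate as a single file, which is what makes the portability and versioning benefits practical.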

It’s 100% open source.

NVIDIA Research recently published a paper exploring how Small Language Models (SLMs) can play a bigger role in agentic systems.

The key idea: instead of relying solely on large, general-purpose LLMs, SLMs can be integrated into pipelines for focused, tool-like, and repetitive tasks, often with similar effectiveness.

Why consider SLMs for agentic AI?

High accuracy on well-defined, repetitive tasks

Lower memory usage and faster inference

Significant cost savings over time

Easy integration alongside LLMs for broader reasoning
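One way to picture SLMs working alongside LLMs is a simple router: narrow, well-defined task types go to an SLM first, while open-ended requests, or SLM answers that fail a basic output check, fall back to the LLM. The sketch below assumes this design for illustration; it is not the method from the NVIDIA paper. `call_slm`, `call_llm`, the task names in `SLM_TASKS`, and the JSON-validity check are all hypothetical stand-ins for real model clients and real validation.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class Request:
    task_type: str
    prompt: str


# Hypothetical stand-ins for real model clients.
def call_slm(prompt: str) -> str:
    return '{"intent": "refund"}'


def call_llm(prompt: str) -> str:
    return "A longer, open-ended answer."


# Task types assumed (for this sketch) to be narrow and repetitive enough for an SLM.
SLM_TASKS = {"classify_intent", "extract_fields", "format_tool_call"}


def is_valid_json(text: str) -> bool:
    """Assumed output check: SLM tasks here are expected to return JSON."""
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False


def route(
    req: Request,
    slm: Callable[[str], str] = call_slm,
    llm: Callable[[str], str] = call_llm,
) -> tuple[str, str]:
    """Return (model_used, output). Narrow tasks try the SLM first; anything else,
    or any SLM output that fails validation, is escalated to the LLM."""
    if req.task_type in SLM_TASKS:
        out = slm(req.prompt)
        if is_valid_json(out):
            return "slm", out
    return "llm", llm(req.prompt)


print(route(Request("classify_intent", "I want my money back")))  # ('slm', '{"intent": "refund"}')
print(route(Request("plan_trip", "Plan a 3-day trip to Kyoto")))   # ('llm', 'A longer, open-ended answer.')
```

The fallback keeps the LLM as a safety net, so moving a task to an SLM only changes cost and latency for the requests the SLM handles well.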

Key takeaways:

SLM performance is improving rapidly, even at smaller parameter sizes.

Migrating from LLMs to SLMs for certain tasks is more straightforward than expected.

Getting started:

Audit where LLMs are used and identify tasks that could be handled by SLMs.

Use distillation and fine-tuning to adapt SLMs for those subtasks.

Gradually integrate SLMs into your pipeline, then monitor their performance and refine.
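The steps above don't prescribe a specific distillation method. One common option is classic logit-based knowledge distillation (Hinton et al., 2015): the student is trained to match the teacher's temperature-softened output distribution, blended with ordinary cross-entropy on the true label. Below is a minimal plain-Python sketch over a single three-class prediction; for language models the same loss would be applied per token over the vocabulary, assuming teacher and student share a tokenizer. The `temperature` and `alpha` defaults and the example logits are illustrative assumptions, not values from the NVIDIA paper. Another widely used route is simply fine-tuning the SLM on outputs generated by the larger model.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() numerically stable."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: list[float], q: list[float]) -> float:
    """KL(p || q) for discrete distributions; terms where p_i == 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(
    student_logits: list[float],
    teacher_logits: list[float],
    hard_label: int,
    temperature: float = 2.0,  # assumed value
    alpha: float = 0.5,        # assumed weight on the soft-target term
) -> float:
    # Soft-target term: match the teacher's softened distribution. Scaling by T^2
    # keeps its gradient magnitude comparable across temperatures.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = kl_divergence(p_teacher, p_student) * temperature ** 2
    # Hard-target term: ordinary cross-entropy against the ground-truth label at T = 1.
    hard = -math.log(softmax(student_logits)[hard_label])
    return alpha * soft + (1 - alpha) * hard


teacher_logits = [4.0, 1.0, 0.5]  # hypothetical teacher (LLM) scores
student_logits = [2.5, 1.5, 0.2]  # hypothetical student (SLM) scores
print(distillation_loss(student_logits, teacher_logits, hard_label=0))
```

Minimizing this loss over many examples pulls the student's predictions toward the teacher's, including the relative scores the teacher assigns to wrong answers, which a hard label alone cannot convey.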

If you’re building agentic systems, this is worth a deeper look.

That’s a Wrap

That’s all for today. Thank you for reading this edition. See you in the next issue with more AI Engineering insights.

PS: We curate this AI Engineering content for free, and your support means everything. If you find value in what you read, consider sharing it with a friend or two.

Your feedback is valuable: If there’s a topic you’re stuck on or curious about, reply to this email. We’re building this for you, and your feedback helps shape what we send.

WORK WITH US

Looking to promote your company, product, or service to 140K+ AI developers? Get in touch today by replying to this email.