5 No-Code LLM, AI Agent Builders

PLUS: Build and launch your own AI agents

In today’s newsletter:

Apify Actors - Build and launch your own AI agents

5 No-code LLM, AI Agent Builders

Reading time: 3 minutes.

$1M Challenge (TOGETHER WITH APIFY)

Apify lets you turn any project into a runnable micro-app called an Actor. Each Actor runs as a self-contained unit with defined inputs, outputs, and its own runtime.

You can package agents, MCP servers, crawlers, document analyzers, or AI tools that extract, parse, and summarize data, then deploy them as reusable Actors.

Apify is running the $1M Challenge. You can build and publish Actors for real-world use cases, with top projects earning up to $30K in cash prizes along with weekly rewards and community visibility.

Now, let’s dive back into the newsletter!

5 No-code LLM, AI Agent Builders

Building AI agents goes beyond prompt chaining. Real systems require workflow control, tool execution, document access, and visibility into agent behavior.

Proprietary builders simplify setup but limit control when you need deeper integrations, RAG, or self-hosted deployments.

Open-source no-code builders expose execution and configuration through UI-driven tools, letting you build agents, workflows, and retrieval pipelines without hand-written orchestration.

Below are five open-source, no-code builders for AI agents, RAG-based systems, and multi-step workflows.

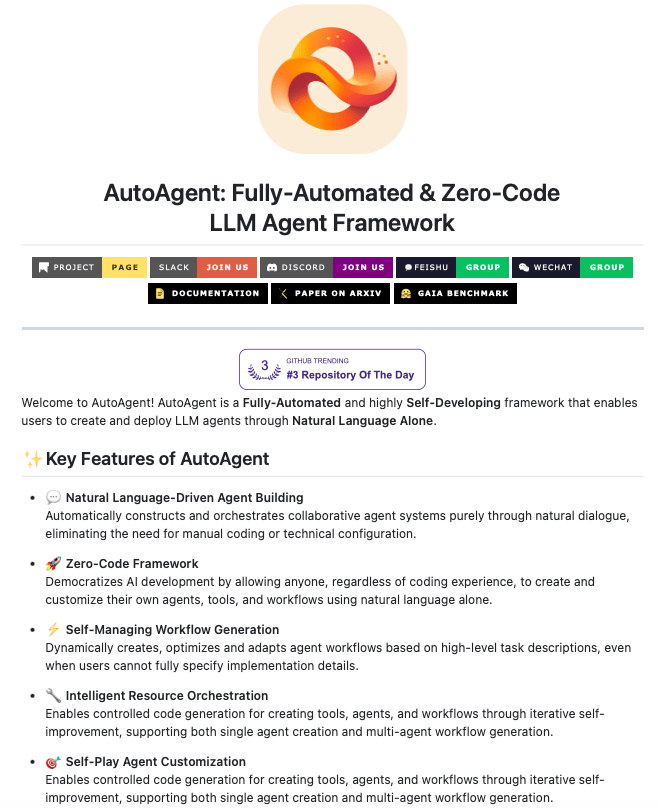

1. AutoAgent

AutoAgent is a fully automated, zero-code LLM agent framework designed to execute end-to-end tasks from a high-level goal.

You define what you want to achieve in natural language, and the system handles task decomposition, planning, execution, and iteration without manual configuration.

Key Features:

No manual agent wiring or prompt engineering

Automatic task planning and execution loop

Designed for hands-off, goal-driven agent runs
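To make the "plan, execute, iterate" idea concrete, here is a minimal sketch of a goal-driven loop in plain Python. It is not AutoAgent's code or API: `run_goal`, `toy_plan`, and `toy_execute` are hypothetical names, and the planner and executor are simple stand-ins for what would be LLM calls in a real system.

```python
from typing import Callable, Dict, List


def run_goal(goal: str,
             plan: Callable[[str], List[str]],
             execute: Callable[[str], bool],
             max_attempts: int = 3) -> List[str]:
    """Decompose a goal into steps, run each step, and retry failed steps."""
    log: List[str] = []
    for step in plan(goal):
        for attempt in range(1, max_attempts + 1):
            ok = execute(step)
            log.append(f"{step}: attempt {attempt} {'ok' if ok else 'failed'}")
            if ok:
                break
        else:
            # Every attempt failed, so stop the whole run.
            log.append(f"{step}: gave up")
            break
    return log


# Hypothetical stand-ins: a real system would call an LLM in both places.
def toy_plan(goal: str) -> List[str]:
    return [f"research {goal}", f"draft {goal}", f"review {goal}"]


_calls: Dict[str, int] = {}


def toy_execute(step: str) -> bool:
    _calls[step] = _calls.get(step, 0) + 1
    # Simulate one transient failure on the first "draft" attempt.
    return not (step.startswith("draft") and _calls[step] == 1)


log = run_goal("report", toy_plan, toy_execute)
for line in log:
    print(line)
```

The point of the sketch is the shape of the loop: the user supplies only the goal, while decomposition and retries happen inside the runner.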

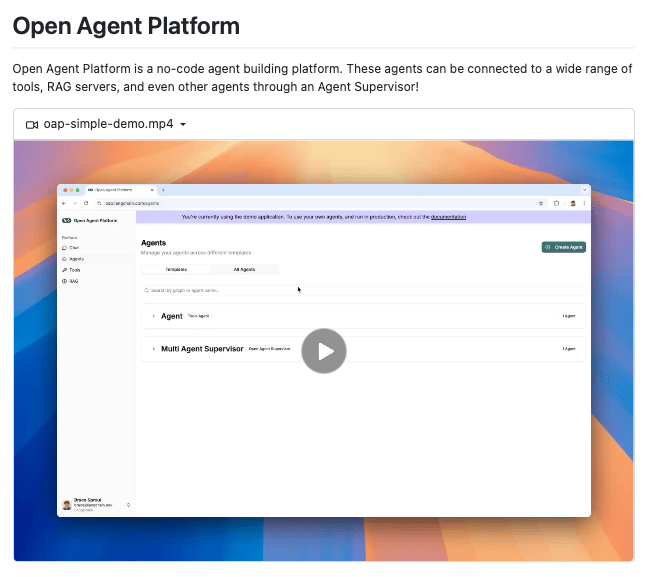

2. LangChain Open Agent Platform

LangChain Open Agent Platform is a no-code UI built on top of LangGraph, exposing graph-based agent orchestration used in LangChain production systems.

Instead of hiding execution, it makes agent flow explicit through nodes, edges, and supervision logic. This is useful when you want control over routing, retries, and multi-agent coordination without writing graph code.

Key Features:

Visual interface for LangGraph-based agents

Supports supervisor and multi-agent patterns

Integrates naturally with LangChain tooling and RAG
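For readers who have not seen graph-based orchestration, the sketch below shows the general pattern in plain Python: nodes are functions, each node returns the name of the next node (the edge), a supervisor routes work, and the runner retries transient failures. It deliberately avoids the LangGraph API; `run_graph`, `supervisor`, `math_worker`, and `search_worker` are hypothetical names used only for illustration.

```python
from typing import Callable, Dict, List

State = Dict[str, object]
Node = Callable[[State], str]  # a node updates state and names the next node


def run_graph(nodes: Dict[str, Node], start: str, state: State,
              max_retries: int = 2) -> List[str]:
    """Walk the graph from `start` until a node returns "END"."""
    path: List[str] = []
    current = start
    while current != "END":
        for attempt in range(max_retries + 1):
            try:
                nxt = nodes[current](state)
                break
            except RuntimeError:
                if attempt == max_retries:
                    raise  # retries exhausted, surface the error
        path.append(current)
        current = nxt
    return path


def supervisor(state: State) -> str:
    # Route to a worker until an answer exists, then finish.
    if "answer" in state:
        return "END"
    return "math" if state["kind"] == "math" else "search"


def math_worker(state: State) -> str:
    state["tries"] = int(state.get("tries", 0)) + 1
    if state["tries"] == 1:
        raise RuntimeError("simulated transient failure")
    state["answer"] = 2 + 2
    return "supervisor"


def search_worker(state: State) -> str:
    state["answer"] = "stub search result"
    return "supervisor"


nodes = {"supervisor": supervisor, "math": math_worker, "search": search_worker}
state: State = {"kind": "math"}
path = run_graph(nodes, "supervisor", state)
print(path, state["answer"])
```

A visual builder draws this same structure as boxes and arrows, so routing and retry rules become settings on the canvas instead of code.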

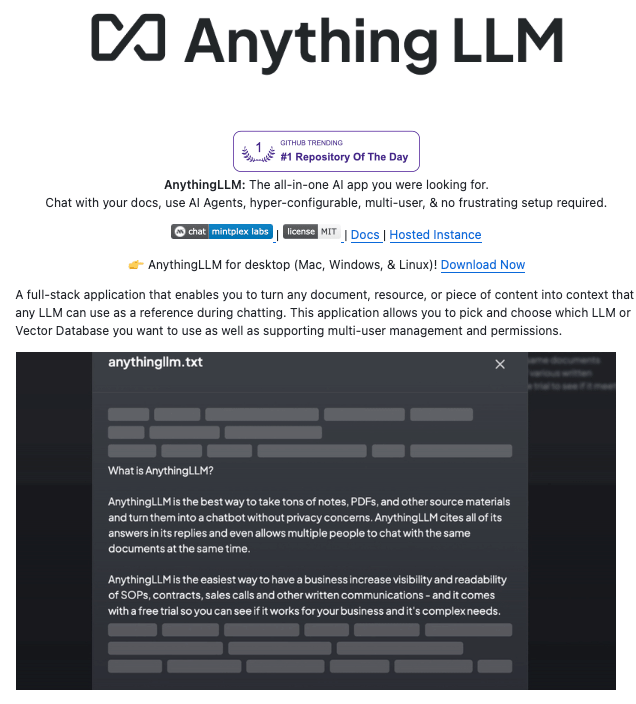

3. AnythingLLM

AnythingLLM is a self-hosted, all-in-one platform for building internal AI tools around documents, chat, and agents.

It combines RAG, agent workflows, and knowledge bases into a single workspace that both technical and non-technical teams can use.

Key Features:

Built-in document ingestion and vector storage

Visual agent builder with tool and browsing support

Designed for internal knowledge assistants and team tools
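As a rough illustration of what "document ingestion and vector storage" means, here is a toy retrieval sketch in plain Python. It is not AnythingLLM's implementation: `TinyVectorStore`, `embed`, and `cosine` are hypothetical names, and the "embedding" is just a word count, where a real system would use an embedding model and a proper vector database.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count (assumption: stands in for a model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class TinyVectorStore:
    """In-memory store that keeps each chunk next to its vector."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def ingest(self, chunks: List[str]) -> None:
        for chunk in chunks:
            self.items.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda it: cosine(q, it[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = TinyVectorStore()
store.ingest([
    "Expense reports are due on the fifth of each month.",
    "The VPN client must be updated every quarter.",
    "New hires receive a laptop on their first day.",
])
top = store.search("When are expense reports due?")
print(top[0])
```

In a RAG setup, the retrieved chunk would then be passed to the LLM as context for answering the question.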

4. Dify

Dify is an open-source platform for building and operating LLM-powered applications and agents, with a strong focus on UI-driven configuration and observability.

It supports prompt management, RAG pipelines, agent logic, and runtime monitoring, making it suitable for teams running AI features in production.

Key Features:

UI-first approach to LLM apps and agents

Supports RAG, tools, and multi-step workflows

Includes logging, monitoring, and versioning

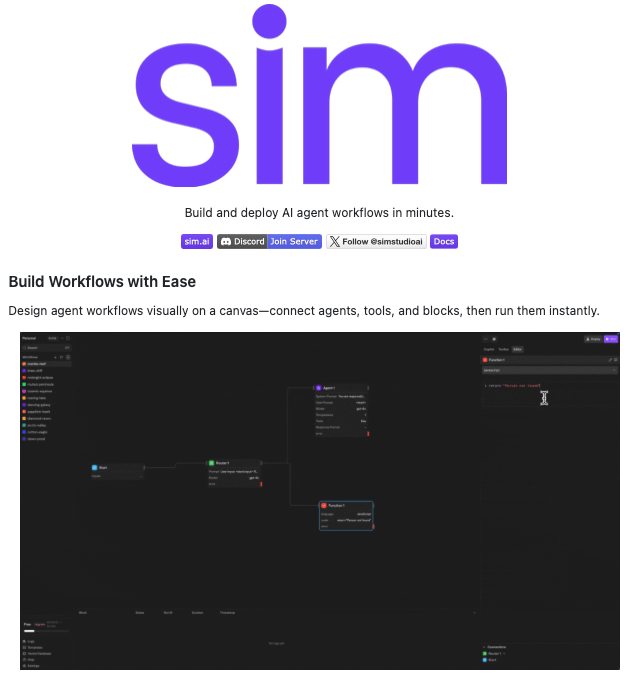

5. Sim

Sim is a visual workflow builder for agent pipelines and AI-driven processes, with an AI copilot to help generate and modify flows.

Workflows are designed as executable graphs, with strong support for integrations and detailed execution tracing for debugging.

Key Features:

Drag-and-drop workflow design

AI copilot for generating agent flows

Execution tracing for inspection and debugging

Each tool approaches agents differently, from automated execution to supervised workflows and RAG-driven systems. The right choice depends on how much control, visibility, and integration your use case requires.

That’s a Wrap

That’s all for today. Thank you for reading today’s edition. See you in the next issue with more AI Engineering insights.

As we close out the year, we at AI Engineering wish you a happy New Year. We’re excited to dive into deeper technical topics and new directions in the months ahead.

PS: We curate this AI Engineering content for free, and your support means everything. If you find value in what you read, consider sharing it with a friend or two.

Your feedback is valuable: If there’s a topic you want to learn more about or something you’d like us to cover in the new year, reply to this email. We’re building this for you, and your input helps shape what comes next.

WORK WITH US

Looking to promote your company, product, or service to 160K+ AI developers? Get in touch today by replying to this email.